Estimated read: 5–7 minutes

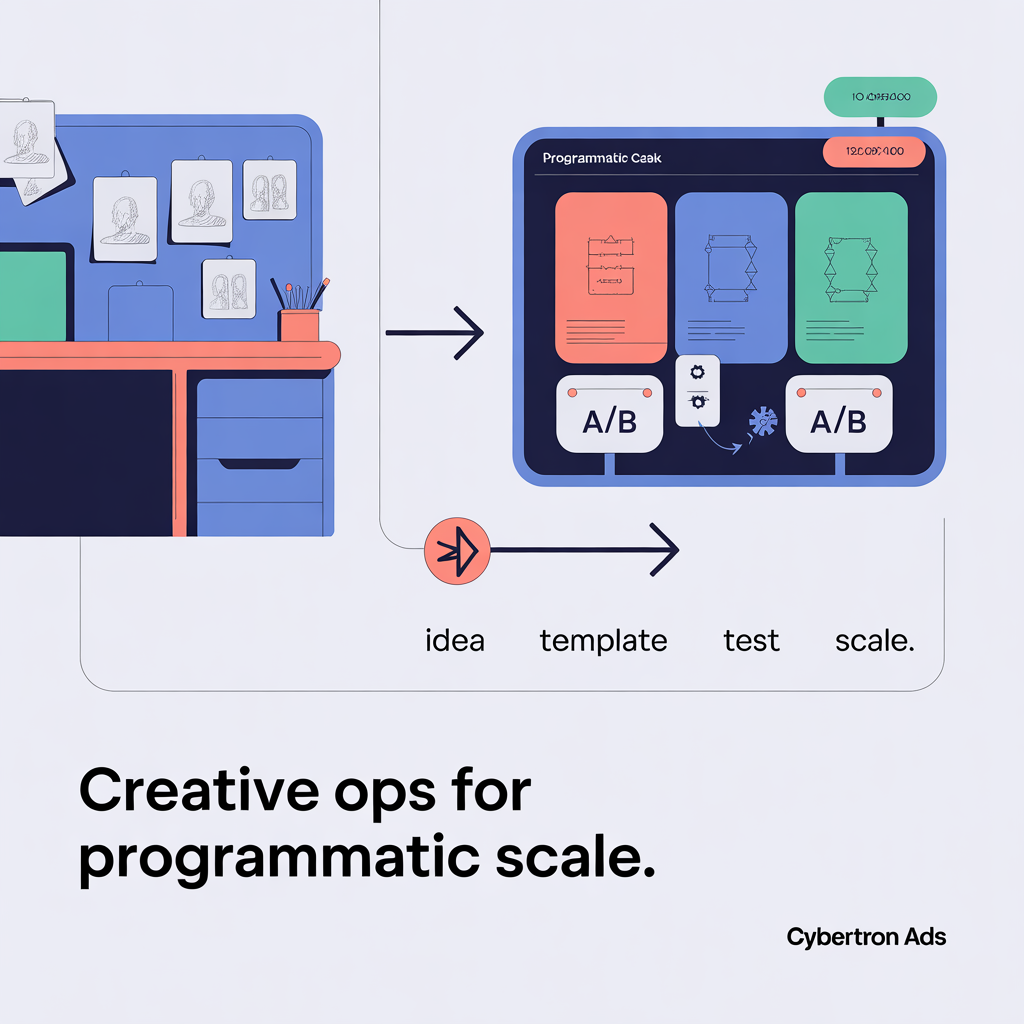

Creative is the differentiator in advertising — but scaling creative testing is where many teams fail. You can have great ideas, but without a system that designs, tests, measures and scales them, you’re left spinning cycles on guesswork. This guide gives you a pragmatic, operational approach to creative testing at scale: build a micro-test library, use DCO templates, adopt attention-first KPIs, and create the organizational glue that connects creative teams to programmatic ops.

At Cybertron Ads we help brands turn this into a repeatable engine — here’s the exact playbook.

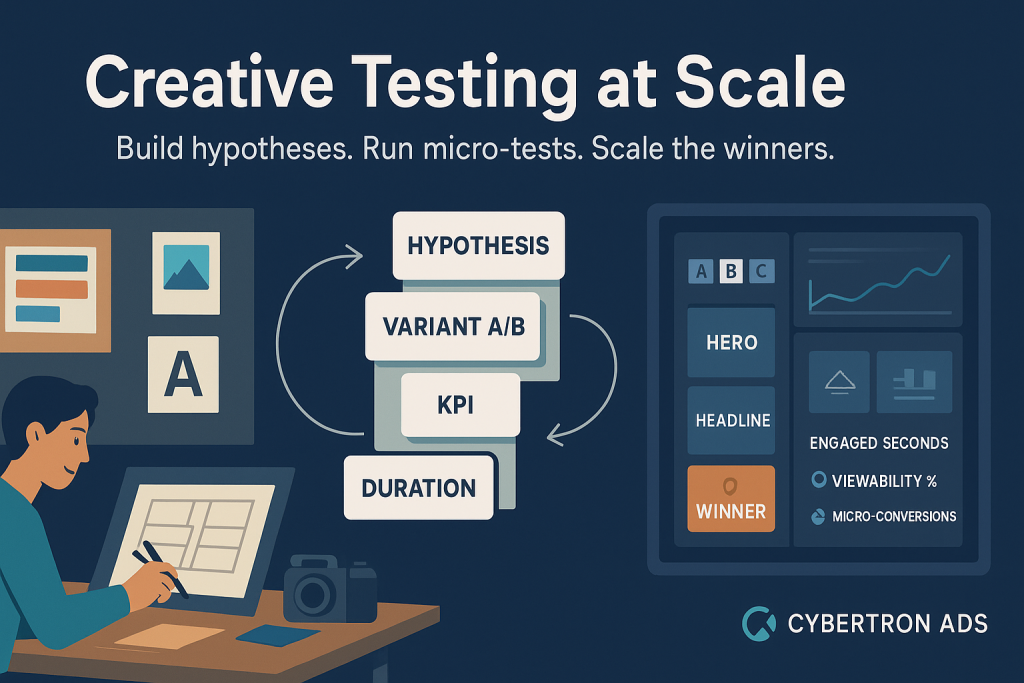

1. Start with a hypothesis-driven micro-test library

Big tests are slow. Micro-tests are fast, cheap, and informative.

What to include in your Micro-Test Library:

- Hypothesis card — one sentence: “If we show the product in-use vs on white background, view-time will increase.”

- Variable(s) — what you’ll change: hero image, headline, CTA, color, duration.

- Success metric — a short-term metric (engaged seconds, completion rate) and a long-term one (lift in assisted conversions).

- Creative asset — two variants (A vs B) ready to deploy.

- Audience & placement — where the test runs (in-feed mobile, social, programmatic OOH).

- Duration & budget — e.g., 7–14 days, with the minimum number of impressions needed for a statistically reliable signal.

- Learnings & next steps — a short template to record results and the decision.

Operate dozens of these in parallel. Keep the tests narrow — change one major element per micro-test — so your signal is clean.
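
One way to keep cards consistent is to store each one as a small structured record and check it before launch. The sketch below is illustrative only: the `HypothesisCard` class, its field names, and the validation rules are assumptions drawn from the list above, not a standard schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HypothesisCard:
    """One micro-test entry. Field names are hypothetical, not a standard."""
    hypothesis: str
    variable: str          # the single major element being changed
    primary_metric: str
    variant_a: str
    variant_b: str
    placement: str
    duration_days: int
    learnings: str = ""    # filled in after the test

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the card is ready."""
        problems = []
        if not self.hypothesis.strip():
            problems.append("hypothesis is empty")
        if "," in self.variable or " and " in self.variable:
            problems.append("more than one variable listed; keep the test narrow")
        if self.variant_a == self.variant_b:
            problems.append("variants A and B are identical")
        if not 7 <= self.duration_days <= 14:
            problems.append("duration outside the 7-14 day window")
        return problems


card = HypothesisCard(
    hypothesis="If we show the product in-use vs on white background, view-time will increase.",
    variable="hero image",
    primary_metric="engaged_seconds",
    variant_a="product_on_white.png",
    variant_b="product_in_use.png",
    placement="in-feed mobile",
    duration_days=10,
)
print(card.validate())  # prints []
```

A spreadsheet row per card works just as well; the point is that every card carries the same fields and fails fast when it bundles more than one variable.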

2. Build DCO templates that make testing efficient

Dynamic Creative Optimization (DCO) lets you swap creative modules in real time. But to scale, you need modular templates built for testability.

A DCO template should be modular and strict:

- Hero image module (swap images: lifestyle, product-in-context, user-generated).

- Headline module (short, benefit, curiosity).

- Supporting copy module (one-line).

- CTA module (text + color).

- Badge / trust module (rating, “as seen in”).

- Data tokens (dynamic price, nearest store, local offer).

Two production rules:

- One major creative experiment per template — keep other modules stable.

- Automate reporting tags — every served variant automatically reports which module variant served.

This structure turns creative into data — and makes it cheap to run 20+ experiments across placements.
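
The two production rules can be sketched in a few lines. This is a minimal illustration under assumed names: the `MODULES` dictionary, the module values, and the `key=value|...` tag format are all hypothetical, and a real DCO platform will have its own feed and tagging conventions.

```python
from itertools import product

# Hypothetical module library; names and values are illustrative only.
# Only "hero" has more than one option, so it is the experiment.
MODULES = {
    "hero": ["lifestyle", "product_in_context", "ugc"],
    "headline": ["benefit"],
    "cta": ["shop_now_orange"],
    "badge": ["rating"],
}


def experiment_modules(modules):
    """Return the modules that vary; the rule above allows exactly one."""
    return [name for name, options in modules.items() if len(options) > 1]


def build_variants(modules):
    """Yield every module combination together with its reporting tag."""
    names = sorted(modules)
    for combo in product(*(modules[name] for name in names)):
        assignment = dict(zip(names, combo))
        tag = "|".join(f"{name}={value}" for name, value in assignment.items())
        yield {"modules": assignment, "report_tag": tag}


varying = experiment_modules(MODULES)
if len(varying) != 1:
    raise ValueError(f"expected one experimental module, found {varying}")

variants = list(build_variants(MODULES))
for variant in variants:
    print(variant["report_tag"])
```

Because the tag is generated from the same assignment that builds the ad, the served creative and the reported variant cannot drift apart.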

3. Measure attention first — then conversions

Clicks are noisy. Attention signals are more predictive of longer-term brand outcomes.

Attention-first KPI stack (recommended):

- Engaged Seconds (ES): continuous seconds the ad was viewable and active.

- Viewability %: percent of impressions meeting viewability standard.

- Completion Rate (for video): percent of users who watch the full asset.

- Micro-conversion rate: e.g., map-open, ‘call’ tap, form-start.

- Assisted conversion lift / Brand lift: measured via holdouts or brand-lift studies.

For micro-tests, use ES and micro-conversions as primary signals. Only promote winners that both hold attention and move downstream actions.
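
As a rough sketch of how the stack and the promotion rule fit together, the example below summarizes impression-level records and promotes a challenger only when it wins on both ES and micro-conversions. The record layout (`viewable`, `engaged_seconds`, `micro_converted`) and the toy data are assumptions for illustration, and the comparison is a plain "greater than" check, not a significance test.

```python
from statistics import mean


def summarize(impressions):
    """Compute attention-first KPIs from impression-level records."""
    viewable = [i for i in impressions if i["viewable"]]
    return {
        "viewability_pct": 100 * len(viewable) / len(impressions),
        # Mean engaged seconds over viewable impressions only.
        "mean_engaged_seconds": (
            mean(i["engaged_seconds"] for i in viewable) if viewable else 0.0
        ),
        "micro_conversion_rate": (
            sum(i["micro_converted"] for i in impressions) / len(impressions)
        ),
    }


def should_promote(control, challenger):
    """Promote only if the challenger wins on attention AND downstream action."""
    a, b = summarize(control), summarize(challenger)
    return (
        b["mean_engaged_seconds"] > a["mean_engaged_seconds"]
        and b["micro_conversion_rate"] > a["micro_conversion_rate"]
    )


# Toy data, invented purely to exercise the functions.
control = [
    {"viewable": True, "engaged_seconds": 1.0, "micro_converted": False},
    {"viewable": True, "engaged_seconds": 2.0, "micro_converted": False},
    {"viewable": False, "engaged_seconds": 0.0, "micro_converted": False},
    {"viewable": True, "engaged_seconds": 1.5, "micro_converted": True},
]
challenger = [
    {"viewable": True, "engaged_seconds": 3.0, "micro_converted": True},
    {"viewable": True, "engaged_seconds": 4.0, "micro_converted": True},
    {"viewable": True, "engaged_seconds": 2.0, "micro_converted": False},
    {"viewable": False, "engaged_seconds": 0.0, "micro_converted": False},
]

print(summarize(control))
print(should_promote(control, challenger))  # prints True
```

In practice the decision should also account for sample size, since four impressions per variant prove nothing.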

4. Design a repeatable experiment cadence

Speed matters. Here’s a practical cadence that balances speed and statistical power:

- Day 0–3 — Prep: Create variants, QA, set tracking tags, and define sample size.

- Day 4–14 — Run: Run the micro-test. Monitor for anomalies (traffic spikes, bot issues).

- Day 15 — Analyze: Pull ES distribution, completion, and micro-conversions. Use holdout/control where possible.

- Day 16 — Decide: Promote winning creative into a DCO template for scaling, archive the loser, and document the learning.

Start a new cycle each week rather than waiting for the previous one to finish. With this cadence you can run 12–24 micro-tests in a quarter and iterate quickly.
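
The "define sample size" step in Prep can be estimated up front. The sketch below uses the standard normal-approximation formula for comparing two proportions, which suits rate metrics such as micro-conversions. The article does not prescribe a statistical method, so the formula choice, the 5% significance level, and the 80% power default are all assumptions.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(p_a, p_b, alpha=0.05, power=0.8):
    """Impressions needed per variant to detect p_a vs p_b (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_a + p_b) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_a * (1 - p_a) + p_b * (1 - p_b))
    ) ** 2
    return ceil(numerator / (p_a - p_b) ** 2)


# Example: a 0.4% baseline micro-conversion rate vs an expected 1.2%.
n = sample_size_per_variant(0.004, 0.012)
print(n)  # a little under 2,000 impressions per variant
```

Smaller expected differences need far more impressions: detecting 0.4% vs 0.5% with the same settings requires roughly 50 times as many, which is worth knowing before committing a 7-day budget.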

5. Roles & ops — make it real

Operationalizing testing requires clear roles:

- Creative Lead: owns the idea, narrative, and assets.

- Creative Ops / Producer: builds DCO templates and variants.

- Media Strategist: picks placements, budgets, and audiences.

- Data Analyst / Measurement Lead: validates ES, flags anomalies, runs lift tests.

- Programmatic Ops: configures the DCO feed, trafficking, and frequency controls.

- Product Owner / Growth Lead: makes the go/no-go scaling decisions.

Set 30–60 minute weekly syncs to unblock tests and commit to decisions — not “we’ll revisit later.”

6. Example experiment matrix (practical)

| Test | Variant A | Variant B | Primary KPI | Duration |
|---|---|---|---|---|
| Hero image | Product on white | In-use lifestyle | Engaged Seconds | 10 days |
| Headline tone | Feature-led | Benefit-led | Micro-conversions | 7 days |
| Video length | 6s | 15s | Completion Rate | 14 days |
| CTA color | Blue | Orange | Click-to-map | 7 days |

Run these simultaneously across different audiences to capture cross-segment effects.

7. Avoid common pitfalls

- Too many variables at once. Change one major element per test.

- Skipping quality control. Broken dynamic tokens or incorrect alt text kill tests.

- Ignoring signal quality. Vet publishers for viewability and fraud.

- Not archiving learnings. Store results in a searchable test repository for future reference.

8. Translate learnings into scale

When a variant consistently wins on attention and micro-conversions:

- Promote the variant into the core DCO template as the new default.

- Run an incrementality holdout (5–15% holdout) to measure true lift.

- Scale gradually by doubling spend for 7 days and monitoring for fatigue.

- Rotate fresh variants every 4–6 weeks to prevent creative decay.
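
The incrementality holdout can be sketched as a deterministic split: hash each user ID into a bucket and withhold the new creative from a fixed share. Everything here is an assumption for illustration, including the `in_holdout` and `incremental_lift` helpers, the salt value, and the 10% default (chosen from the 5–15% range above). Real setups usually rely on the DSP's or measurement vendor's own holdout tooling.

```python
import hashlib


def in_holdout(user_id: str, holdout_pct: float = 10.0,
               salt: str = "creative-test-1") -> bool:
    """Deterministically place roughly holdout_pct% of users in the holdout."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10000  # bucket in 0..9999
    return bucket < holdout_pct * 100


def incremental_lift(exposed_rate: float, holdout_rate: float) -> float:
    """Relative lift of the exposed group over the holdout group."""
    return (exposed_rate - holdout_rate) / holdout_rate


# The same user always lands in the same group.
print(in_holdout("user-123") == in_holdout("user-123"))  # prints True

# Share of synthetic users assigned to the holdout; should be close to 0.10.
users = [f"user-{i}" for i in range(20000)]
share = sum(in_holdout(u) for u in users) / len(users)
print(round(share, 3))
```

Changing the salt per experiment reshuffles the groups, so the same users are not withheld from every campaign.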

9. Example mini-case (hypothetical)

A retail client tested “product-on-white” vs “product-in-use.” Results after 10 days:

- Engaged Seconds: 1.4s (white) vs 3.2s (in-use)

- Micro-conversion (map opens): 0.4% vs 1.2%

Decision: promote the “in-use” creative, migrate it to DCO templates with a local store token, and scale mobile placements. The result: a 40% month-over-month lift in store visits (hypothetical, illustrative numbers).

10. Tech & tooling recommendations

Start with these capabilities (you can substitute vendors):

- DCO provider (creative template engine).

- Attention measurement (viewability + engaged seconds SDK).

- Programmatic DSP with DCO support.

- Analytics / BI for test tracking and dashboards.

- Campaign repository (simple spreadsheet or lightweight wiki) for hypothesis cards.

11. How Cybertron Ads helps

We act as the glue: translating creative intent into programmatic experiments and meaningful measurement. Our services include:

- Building micro-test libraries and DCO templates.

- Managing experiment cadence and measurement.

- Providing attention-first dashboards and incrementality testing.

- Scaling winners across OOH, mobile, and digital channels.

If you want a ready-to-run 30-day creative testing sprint (templates, tracking plan, and initial experiments), we’ll set it up and run it with you.

Quick checklist to start this week

- Create 5 hypothesis cards.

- Build 2 DCO templates with swappable modules.

- Define primary attention KPI (ES) and micro-conversions.

- Run your first two micro-tests (7–14 days).

- Document results and prepare to scale the winner.

Creative testing at scale is less about magic and more about process. Build the library, standardize templates, measure attention first, and institutionalize fast decisions. Do that, and creative becomes a repeatable engine that feeds predictable growth.

Want Cybertron Ads to help design your first micro-test library and DCO template this month? Let’s book a quick call and get your testing engine running.